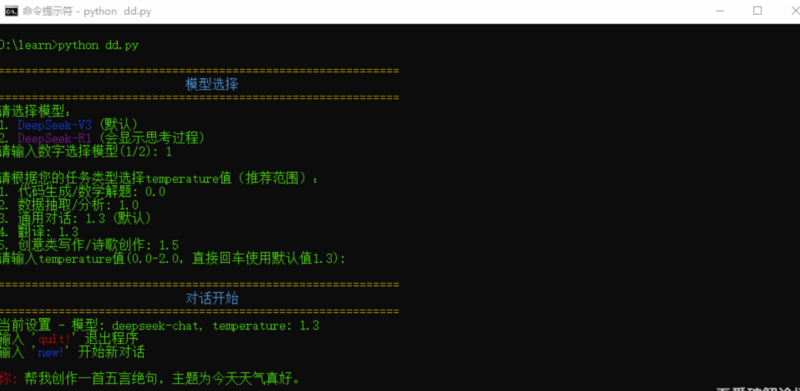

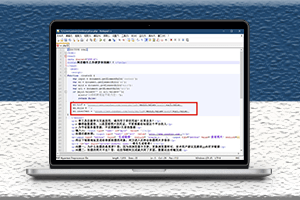

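Out of boredom I wrote a command-line DeepSeek chat client. Follow the note in the source to substitute your own API key (you need to apply for one from DeepSeek yourself). Feel free to hack on it.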
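If you would rather not paste the key into the source file, one option is to read it from an environment variable. This is only a rough sketch: the variable name `DEEPSEEK_API_KEY` and the helper `load_api_key` are my own assumptions for illustration, and the script itself simply hard-codes `API_KEY`.

```python
import os


def load_api_key(env_var="DEEPSEEK_API_KEY"):
    """Return the API key stored in an environment variable.

    Both the variable name and this helper are hypothetical; they are not
    part of the script, which hard-codes API_KEY instead.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {env_var} environment variable to your DeepSeek API key"
        )
    return key
```

With something like this in place, the assignment in the script could become `API_KEY = load_api_key()`.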

The code is as follows:

# Install the required libraries, requests and colorama
# (-i https://pypi.tuna.tsinghua.edu.cn/simple temporarily selects the Tsinghua mirror in China for faster downloads)
#
# pip install colorama requests -i https://pypi.tuna.tsinghua.edu.cn/simple
#
# Aliyun mirror: https://mirrors.aliyun.com/pypi/simple/
# USTC mirror: https://pypi.mirrors.ustc.edu.cn/simple/

import json

import requests
from colorama import Fore, Style, init

# Initialize colorama (handles terminal colors on Windows and Linux/macOS automatically)
init(autoreset=True)

# Replace with your DeepSeek API key
API_KEY = "★★★Replace with your own DeepSeek API key; keep the surrounding quotes!★★★"

# DeepSeek API endpoint
API_URL = "https://api.deepseek.com/v1/chat/completions"

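def call_deepseek_api_stream(messages, model, temperature):
    """Stream a chat completion, yielding parsed chunks (or a single error dict)."""
    headers = {
        "Authorization": f"Bearer {API_KEY}",
        "Content-Type": "application/json",
    }
    payload = {
        "model": model,
        "messages": messages,
        "temperature": temperature,
        "stream": True,
    }
    try:
        response = requests.post(API_URL, headers=headers, json=payload, stream=True)
    except requests.RequestException as exc:
        # Network-level failure (DNS, refused connection, ...)
        yield {"error": f"API request failed: {exc}"}
        return
    if response.status_code != 200:
        yield {"error": f"API request failed, status code: {response.status_code}", "response": response.text}
        return
    for line in response.iter_lines():
        if not line:
            continue
        decoded_line = line.decode("utf-8")
        if not decoded_line.startswith("data:"):
            continue
        data = decoded_line[5:].strip()
        if data == "[DONE]":
            break
        try:
            chunk = json.loads(data)
        except json.JSONDecodeError:
            continue
        # Report the final token statistics separately, then pass the rest of
        # the chunk on so that any content delta it carries is not lost.
        usage = chunk.pop("usage", None)
        if usage:
            yield {"usage": usage}
        yield chunk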

def count_tokens(text):
    """Rough token estimate (the figure returned by the API is authoritative)."""
    return len(text) // 4  # English averages about 4 characters per token; Chinese is closer to 2

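def get_temperature_recommendation():
    """Show the recommended temperature for each task type and prompt for a value."""
    print("\nChoose a temperature for your task (recommended values):")
    print("1. Code generation / math problems: 0.0")
    print("2. Data extraction / analysis: 1.0")
    print("3. General conversation: 1.3 (default)")
    print("4. Translation: 1.3")
    print("5. Creative writing / poetry: 1.5")
    while True:
        user_input = input("Enter a temperature (0.0-2.0; press Enter for the default 1.3): ").strip()
        if not user_input:  # User just pressed Enter
            return 1.3  # Default value
        try:
            temp = float(user_input)
            if 0.0 <= temp <= 2.0:
                return temp
            print("Out of range; please enter a value between 0.0 and 2.0")
        except ValueError:
            print("Please enter a valid number, or press Enter")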

def print_separator(title=None):
    """Print a separator line, optionally with a title."""
    sep = "=" * 60
    if title:
        print(f"\n{Fore.YELLOW}{sep}")
        print(f"{Fore.CYAN}{title.center(60)}")
        print(f"{Fore.YELLOW}{sep}{Style.RESET_ALL}")
    else:
        print(f"{Fore.YELLOW}{sep}{Style.RESET_ALL}")

def main():
    # Model selection
    print_separator("Model selection")
    print(f"{Fore.GREEN}Choose a model:{Style.RESET_ALL}")
    print(f"1. {Fore.BLUE}DeepSeek-V3{Style.RESET_ALL} (default)")
    print(f"2. {Fore.MAGENTA}DeepSeek-R1{Style.RESET_ALL} (shows the reasoning process)")
    model_choice = input("Enter a number to choose the model (1/2): ").strip()
    if model_choice == "2":
        model = "deepseek-reasoner"
        show_reasoning = True
    else:
        model = "deepseek-chat"  # Default: the V3 model
        show_reasoning = False

    # Get the temperature (pressing Enter accepts the default)
    temperature = get_temperature_recommendation()

    # Build the system prompt once, outside the loops, so it can be reused
    system_prompt = "You are a helpful AI assistant."
    if show_reasoning:
        system_prompt += " Please show your reasoning step by step."

    # Outer loop: restarts the session
    while True:
        # Initialize the conversation history (reset for every new session)
        conversation_history = [
            {"role": "system", "content": system_prompt}
        ]
        total_tokens = 0
        print_separator("Conversation started")
        print(f"{Fore.GREEN}Current settings - model: {model}, temperature: {temperature}{Style.RESET_ALL}")
        print(f"Type '{Fore.RED}quit!{Style.RESET_ALL}' to exit the program")
        print(f"Type '{Fore.BLUE}new!{Style.RESET_ALL}' to start a new conversation\n")

        # Inner loop: handles a single session
        while True:
            user_input = input(f"{Fore.RED}You: {Style.RESET_ALL}")
            if user_input.lower() == "quit!":
                print_separator("Conversation ended")
                print(f"{Fore.CYAN}Total tokens used: {total_tokens}{Style.RESET_ALL}")
                return  # Exit the program entirely
            if user_input.lower() == "new!":
                print_separator("New conversation")
                break  # Leave the inner loop and start a new session

            # Append the user message to the conversation history
            conversation_history.append({"role": "user", "content": user_input})
            user_tokens = count_tokens(user_input)
            total_tokens += user_tokens
            print(f"{Fore.BLUE}AI: {Style.RESET_ALL}", end="", flush=True)
            full_reply = ""
            reasoning_content = ""
            current_reasoning = ""
            current_tokens = 0
            is_reasoning_block = False

            # Stream the AI reply
            for chunk in call_deepseek_api_stream(conversation_history, model, temperature):
                if "error" in chunk:
                    print(f"\n{Fore.RED}Error: {chunk['error']}{Style.RESET_ALL}")
                    break
                # Token statistics arrive near the end of the stream; record
                # them without discarding any delta carried by the same chunk
                if chunk.get("usage"):
                    current_tokens = chunk["usage"].get("total_tokens", 0)
                # Guard against chunks whose "choices" is missing or empty
                choices = chunk.get("choices") or [{}]
                delta = choices[0].get("delta") or {}

                # Handle the reasoning process
                reasoning = delta.get("reasoning_content") if show_reasoning else None
                if reasoning:
                    if not is_reasoning_block:
                        print(f"\n{Fore.CYAN}╔{'Reasoning':═^58}╗{Style.RESET_ALL}")
                        print(f"{Fore.CYAN}║ {Style.RESET_ALL}", end="")
                        is_reasoning_block = True
                    print(f"{Fore.CYAN}{reasoning}{Style.RESET_ALL}", end="", flush=True)
                    current_reasoning += reasoning
                    reasoning_content += reasoning

                # Handle the regular reply content (may be None while reasoning)
                content = delta.get("content") or ""
                if content:
                    if is_reasoning_block:
                        print(f"\n{Fore.CYAN}╚{'':═^58}╝{Style.RESET_ALL}")
                        print(f"{Fore.GREEN}╔{'Answer':═^58}╗{Style.RESET_ALL}")
                        print(f"{Fore.GREEN}║ {Style.RESET_ALL}", end="")
                        is_reasoning_block = False
                    print(f"{Fore.GREEN}{content}{Style.RESET_ALL}", end="", flush=True)
                    full_reply += content

            # Close the current output block
            if is_reasoning_block:
                print(f"\n{Fore.CYAN}╚{'':═^58}╝{Style.RESET_ALL}")
            elif full_reply:
                print(f"\n{Fore.GREEN}╚{'':═^58}╝{Style.RESET_ALL}")

            # Append the AI reply to the conversation history
            if full_reply:
                if show_reasoning and reasoning_content:
                    assistant_reply = f"[Reasoning]\n{reasoning_content}\n[Answer]\n{full_reply}"
                else:
                    assistant_reply = full_reply
                conversation_history.append({
                    "role": "assistant",
                    "content": assistant_reply
                })
                reply_tokens = count_tokens(full_reply)
                if current_tokens == 0:
                    # The API reported no usage; fall back to the rough estimate
                    current_tokens = user_tokens + reply_tokens
                total_tokens += current_tokens - user_tokens
                print(f"\n{Fore.YELLOW}[This turn: {current_tokens} tokens | Total: {total_tokens} tokens]{Style.RESET_ALL}")
            else:
                # No reply arrived (for example, the request failed): drop the
                # unanswered user message so the history keeps alternating roles
                conversation_history.pop()

if __name__ == "__main__":
    main()

![Image 1 - Command-line DeepSeek chat client for personal use: build your own AI assistant](https://www.zywz6.com/wp-content/uploads/2025/07/d2b5ca33bd20250725131453-1024x501.png)
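The streaming function in the listing does its line parsing inline: skip blank lines, keep only lines starting with `data:`, stop at `[DONE]`, and ignore anything that is not valid JSON. Pulled out on its own, that logic might look roughly like the sketch below. The helper name `parse_sse_line` and its `(kind, payload)` return convention are my own assumptions, not part of the script.

```python
import json


def parse_sse_line(raw_line):
    """Parse one raw line (bytes) of the streamed response.

    Returns ("skip", None) for blank lines, non-data lines and malformed
    JSON, ("done", None) for the "[DONE]" sentinel, and ("chunk", dict)
    for a successfully decoded JSON payload.
    """
    if not raw_line:
        return ("skip", None)
    text = raw_line.decode("utf-8")
    if not text.startswith("data:"):
        return ("skip", None)
    data = text[5:].strip()  # drop the "data:" prefix
    if data == "[DONE]":
        return ("done", None)
    try:
        return ("chunk", json.loads(data))
    except json.JSONDecodeError:
        return ("skip", None)
```

Keeping the parsing in a pure function like this makes it possible to check the edge cases without calling the API at all.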

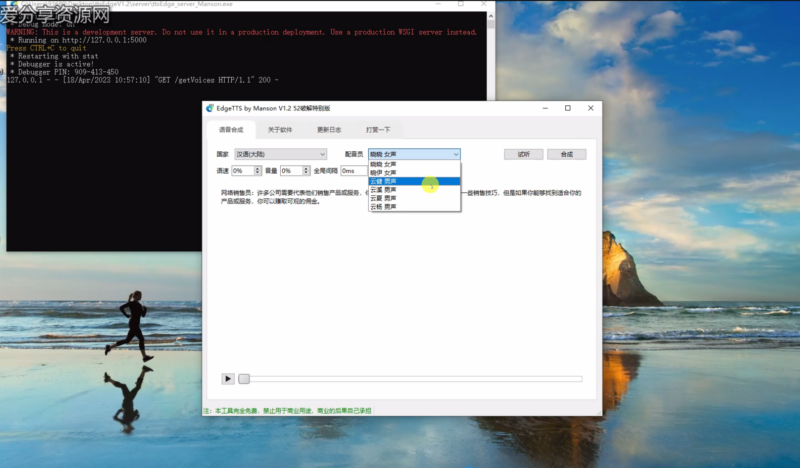
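The main loop in the listing builds up the reasoning text and the answer from the `delta` of each streamed chunk, and picks up `total_tokens` from the `usage` field when one is present. Here is a small sketch of that folding step without any printing. The function name `collect_reply` is hypothetical, and the sample chunks in the usage note are hand-written to mimic the shape the script expects, not captured from a real response.

```python
def collect_reply(chunks):
    """Fold streamed chunks into (reasoning, answer, total_tokens)."""
    reasoning_parts, answer_parts, total_tokens = [], [], 0
    for chunk in chunks:
        usage = chunk.get("usage")
        if usage:
            total_tokens = usage.get("total_tokens", 0)
        # "choices" may be missing or empty, and "delta" fields may be None
        choices = chunk.get("choices") or [{}]
        delta = choices[0].get("delta") or {}
        reasoning_parts.append(delta.get("reasoning_content") or "")
        answer_parts.append(delta.get("content") or "")
    return "".join(reasoning_parts), "".join(answer_parts), total_tokens
```

For example, with made-up chunks:

```python
sample = [
    {"choices": [{"delta": {"reasoning_content": "2+2", "content": None}}]},
    {"choices": [{"delta": {"content": "4"}}], "usage": None},
    {"choices": [{"delta": {"content": "."}}], "usage": {"total_tokens": 12}},
]
reasoning, answer, tokens = collect_reply(sample)
```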

![Image 2 - Command-line DeepSeek chat client for personal use: build your own AI assistant](https://www.zywz6.com/wp-content/uploads/2025/07/d2b5ca33bd20250725131520-1024x486.png)
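The comment in `count_tokens` mentions two ratios (about 4 characters per token for English, about 2 for Chinese), but the function only applies the first one, so Chinese input is underestimated. A rough sketch that applies both ratios could look like this. The name `estimate_tokens` is hypothetical, the ratios are taken from that comment rather than from any tokenizer, and only the basic CJK Unified Ideographs block is treated as Chinese, so treat the result as a ballpark figure. The usage reported by the API stays authoritative.

```python
import math


def estimate_tokens(text, cjk_chars_per_token=2, other_chars_per_token=4):
    """Estimate the token count using separate ratios for Chinese and other text."""
    # Count characters in the basic CJK Unified Ideographs block (U+4E00-U+9FFF)
    cjk = sum(1 for ch in text if "\u4e00" <= ch <= "\u9fff")
    other = len(text) - cjk
    return math.ceil(cjk / cjk_chars_per_token + other / other_chars_per_token)
```

Under these assumptions a four-character Chinese string counts as 2 tokens, where `len(text) // 4` would give 1.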
